
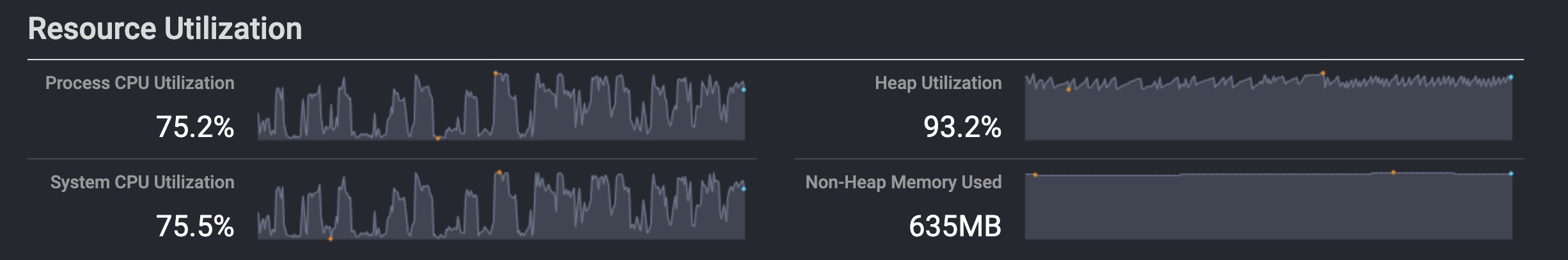

This topic describes tips for tuning parallelism and memory in Presto. The tips cover task-level parallelism, operator-level parallelism, and memory.

Tuning Parallelism at a Task Level

The number of splits in a cluster = node-scheduler.max-splits-per-node * number of worker nodes.

The node-scheduler.max-splits-per-node denotes the target value for the total number of splits that can be running on any worker node. Its default value is 100.
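As a quick illustration of the formula above, the following Python sketch computes the cluster-wide split target. The function name and the 10-worker cluster are hypothetical; only the default of 100 comes from the text.

```python
def cluster_split_target(worker_nodes: int, max_splits_per_node: int = 100) -> int:
    """Target number of running splits across the whole cluster.

    Mirrors the formula:
    node-scheduler.max-splits-per-node * number of worker nodes.
    """
    if worker_nodes < 0 or max_splits_per_node < 0:
        raise ValueError("inputs must be non-negative")
    return worker_nodes * max_splits_per_node


# Hypothetical cluster of 10 workers with the default setting of 100.
print(cluster_split_target(10))  # 1000
```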

If queries are submitted in large batches, or if connectors produce many splits that complete quickly, set a higher value for node-scheduler.max-splits-per-node. A higher value may improve query latency because it ensures that the worker nodes have enough splits to keep them fully engaged.

Conversely, a very high value may lower performance because the splits may not be balanced across workers. Typically, set the value so that at any given time there is only one split waiting to be processed.

For the Hive connector, you can also tune hive.max-initial-splits and hive.max-initial-split-size to achieve higher parallelism.

Tuning Parallelism at an Operator Level

The task concurrency denotes the default local concurrency for parallel operators such as joins and aggregations. Its default value is 16, and the value must be a power of 2. You can increase or reduce the value depending on the query concurrency and worker node utilization, as described below:

- Lower values are better for clusters that run many queries concurrently, because the running queries already keep the cluster nodes busy. In such a case, increasing the concurrency causes context switching and other overheads, which slows down query execution.

- Higher values are better for clusters that run just one query or a few queries.

You can set the operator concurrency at the cluster level using the task.concurrency property. You can also specify the operator concurrency at the session level using the task_concurrency session property.
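The following Python sketch shows one way to validate a candidate value before applying it at the session level. It assumes the value must be a power of two; the helper names are hypothetical, and the generated statement uses Presto's `SET SESSION` syntax with the task_concurrency session property named above.

```python
def is_power_of_two(value: int) -> bool:
    """Return True for 1, 2, 4, 8, ... using the n & (n - 1) bit trick."""
    return value > 0 and (value & (value - 1)) == 0


def task_concurrency_statement(value: int) -> str:
    """Build a SET SESSION statement for a valid task concurrency value."""
    if not is_power_of_two(value):
        raise ValueError(f"task concurrency must be a power of 2, got {value}")
    return f"SET SESSION task_concurrency = {value}"


# Hypothetical choice for a busy cluster: halve the default of 16.
print(task_concurrency_statement(8))  # SET SESSION task_concurrency = 8
```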

Tuning Memory

Presto features these three Memory Pools to manage the available resources:

- General Pool

- Reserved Pool

- System Pool

All queries are initially submitted to the General Pool. As long as the General Pool has memory, queries continue to run in it. Once it runs out of memory, the query using the highest amount of memory in the General Pool is moved to the Reserved Pool. From then on, this one query runs in the Reserved Pool while the other queries continue to run in the General Pool.

If the General Pool runs out of memory again while the Reserved Pool is running a query, the query using the highest amount of memory in the General Pool is moved to the Reserved Pool, but it does not resume execution until the query currently running in the Reserved Pool finishes. The Reserved Pool can hold multiple queries, but it allows only one query to execute at a given point in time.
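The promotion behavior described above can be sketched as a toy model in Python. This is not Presto's implementation; the function name, the query IDs, and the memory figures are all hypothetical, and the model only captures "move the largest query, run one reserved query at a time".

```python
from typing import Dict, List, Optional


def promote_largest_query(
    general_pool: Dict[str, int], reserved_pool: List[str]
) -> Optional[str]:
    """Handle one 'General Pool is out of memory' event in the toy model.

    Moves the query with the highest memory usage from the General Pool to
    the back of the Reserved Pool queue, then returns the query that is
    allowed to execute in the Reserved Pool (the head of the queue).
    """
    if general_pool:
        largest = max(general_pool, key=general_pool.get)
        del general_pool[largest]
        reserved_pool.append(largest)
    return reserved_pool[0] if reserved_pool else None


# Hypothetical memory usage (in GB) of three queries in the General Pool.
general = {"q1": 4, "q2": 9, "q3": 2}
reserved: List[str] = []

print(promote_largest_query(general, reserved))  # q2
print(promote_largest_query(general, reserved))  # q2 (q1 is promoted but waits)
print(reserved)  # ['q2', 'q1']
```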

The System Pool provides memory for operations whose memory Presto does not track. Network buffers and I/O buffers are examples of System Pool usage.

Note: Starting with Presto 0.217 or Trino 302, the System Pool has been removed, leaving only the General Pool and the Reserved Pool. See Release 0.217, #125, and #12257.

This table describes the memory parameters.

| Memory Type | Description | Parameter and default value |
|---|---|---|
| maxHeap | The JVM container size. | Defaults to up to 70% of Instance Memory |
| System Memory | The overhead allocation. | Defaults to 40% of maxHeap |
| Reserved Memory | When General Memory is exhausted and jobs require more memory, Reserved Memory is used by one job at a time to ensure progress until General Memory is available. | query.max-memory-per-node |
| Total Query Memory | The total tracked memory that is used by the query. It is applicable to Presto 0.208 and later versions. | query.max-total-memory-per-node |
| General Memory | The first stop for all jobs. | maxHeap - Reserved Memory - System Memory |
| Query Memory | The maximum memory for the job across the cluster. | query_max_memory is the session property and query.max-memory is the cluster-level property. |
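To make the relationships in the table concrete, here is a minimal Python sketch that derives the per-node pool sizes from the stated defaults (70% of instance memory for maxHeap, 40% of maxHeap for System Memory). The function name, the use of megabytes, and the sample instance size are assumptions for illustration only.

```python
from typing import Dict


def memory_breakdown_mb(
    instance_memory_mb: int,
    reserved_memory_mb: int,
    heap_percent: int = 70,
    system_percent: int = 40,
) -> Dict[str, int]:
    """Derive per-node memory sizes (in MB) from the table's defaults.

    General Memory = maxHeap - Reserved Memory - System Memory.
    """
    max_heap = instance_memory_mb * heap_percent // 100
    system = max_heap * system_percent // 100
    general = max_heap - reserved_memory_mb - system
    if general <= 0:
        raise ValueError("Reserved Memory leaves no room for the General Pool")
    return {
        "max_heap": max_heap,
        "system": system,
        "reserved": reserved_memory_mb,
        "general": general,
    }


# Hypothetical node with 100,000 MB of memory and 10,000 MB reserved.
print(memory_breakdown_mb(100_000, 10_000))
# {'max_heap': 70000, 'system': 28000, 'reserved': 10000, 'general': 32000}
```

Note how every megabyte added to Reserved Memory is taken directly from General Memory, which is why the two settings have to be tuned together.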

Tips to Avoid Memory Issues

Presto delays jobs when there are not enough split slots to support the dataset, and jobs fail when there is not sufficient memory to process the query. If any of the conditions below applies to the current environment, the configuration is not powerful enough and you can expect job lag and failures.

| Condition | Recommendation |
|---|---|
| Reserved Memory * Number of Nodes < Peak Job Size | As a first recommendation, increase the Reserved Memory. However, increasing the Reserved Memory can impact concurrency because the General Pool shrinks accordingly. As a second recommendation, use a larger instance. |
| General Memory * Number of Nodes < Average Job Size * Concurrent Jobs | As a first recommendation, reduce the Reserved Memory, because a larger Reserved Pool shrinks the General Pool. When it is not possible to shrink the Reserved Pool, use a larger instance. |
| Reserved Memory * Number of Nodes < Query Memory | Adjust the setting. |
| Reserved Memory * Number of Nodes < Query Memory Limit | Adjust the setting. |
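The conditions above are simple inequalities, so they can be checked mechanically. The Python sketch below is one hypothetical way to do that; the function name, parameter names, and sample numbers are illustrative and not part of Presto, and the two near-identical Query Memory rows are folded into a single limit check.

```python
from typing import List


def memory_warnings(
    reserved_mb: int,
    general_mb: int,
    nodes: int,
    peak_job_mb: int,
    average_job_mb: int,
    concurrent_jobs: int,
    query_memory_limit_mb: int,
) -> List[str]:
    """Return the under-provisioning conditions that currently hold."""
    warnings = []
    if reserved_mb * nodes < peak_job_mb:
        warnings.append("Reserved Memory * Number of Nodes < Peak Job Size")
    if general_mb * nodes < average_job_mb * concurrent_jobs:
        warnings.append(
            "General Memory * Number of Nodes < Average Job Size * Concurrent Jobs"
        )
    if reserved_mb * nodes < query_memory_limit_mb:
        warnings.append("Reserved Memory * Number of Nodes < Query Memory Limit")
    return warnings


# Hypothetical 5-node cluster: only the peak job size condition is violated.
print(memory_warnings(10_000, 32_000, 5, 60_000, 20_000, 6, 40_000))
# ['Reserved Memory * Number of Nodes < Peak Job Size']
```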

Disabling the Reserved Pool

Presto 0.208 added an experimental configuration property, experimental.reserved-pool-enabled, that allows disabling the Reserved Pool. The Reserved Pool prevents deadlocks when memory is exhausted in the General Pool by promoting the biggest query to the Reserved Pool. However, only one query gets promoted to the Reserved Pool, and the queries in the General Pool enter the blocked state whenever it becomes full. To avoid this scenario, set experimental.reserved-pool-enabled to false to disable the Reserved Pool.

When the Reserved Pool is disabled (experimental.reserved-pool-enabled=false), the General Pool can take advantage of the memory that was previously allocated to the Reserved Pool and support higher concurrency. To avoid deadlocks when the General Pool is full, enable Presto’s OOM killer by setting query.low-memory-killer.policy=total-reservation-on-blocked-nodes. When the General Pool is full on a worker node, the OOM killer resolves the situation by killing the query with the highest memory usage on that node. This allows queries with reasonable memory requirements to keep making progress, while a small number of queries with high memory requirements may be killed to prevent them from monopolizing cluster resources.
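The victim selection described above can be approximated with a short Python sketch. It is a simplified model under stated assumptions, not Presto's actual killer: the function name, node names, query IDs, and memory figures are hypothetical, and the model simply sums each query's reservation over the blocked nodes and picks the largest.

```python
from typing import Dict, Iterable, Optional


def pick_query_to_kill(
    reservations_by_node: Dict[str, Dict[str, int]],
    blocked_nodes: Iterable[str],
) -> Optional[str]:
    """Pick the query with the highest total reservation on blocked nodes."""
    totals: Dict[str, int] = {}
    for node in blocked_nodes:
        for query_id, reserved_mb in reservations_by_node.get(node, {}).items():
            totals[query_id] = totals.get(query_id, 0) + reserved_mb
    if not totals:
        return None  # no blocked node, so nothing needs to be killed
    return max(totals, key=totals.get)


# Hypothetical reservations (in MB) per worker node and query.
reservations = {
    "worker-1": {"q1": 6_000, "q2": 3_000},
    "worker-2": {"q1": 1_000, "q2": 8_000, "q3": 2_000},
}

print(pick_query_to_kill(reservations, ["worker-2"]))  # q2
print(pick_query_to_kill(reservations, ["worker-1"]))  # q1
```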

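Original article: Tuning Presto

Unless otherwise stated, all articles on this blog are original. Copyright for original articles belongs to 过往记忆大数据 (过往记忆); reproduction without permission is prohibited.

Link to this article: [Presto Performance Tuning](https://www.iteblog.com/archives/9933.html)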