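In the articles "Zookeeper 3.4.5 Distributed Installation Guide" and "Hadoop 2.2.0 Fully Distributed Cluster Installation and Setup" we described in detail how to set up distributed ZooKeeper and Hadoop. Today we'll look at how to build a fully distributed HBase on top of Hadoop and ZooKeeper. As before, we pick the newest HBase release available at the time of writing to go with the newest Hadoop, 2.2.0, which means HBase 0.96.0.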

1. Download and extract HBase:

[wyp@master Downloads]$ wget http://mirror.bit.edu.cn/apache/hbase/hbase-0.96.0/hbase-0.96.0-hadoop2-bin.tar.gz

[wyp@master Downloads]$ tar -zxvf hbase-0.96.0-hadoop2-bin.tar.gz

[wyp@master Downloads]$ cd hbase-0.96.0-hadoop2/

[wyp@master hbase-0.96.0-hadoop2]$
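The archive name and the directory it unpacks to are not the same here: hbase-0.96.0-hadoop2-bin.tar.gz unpacks into hbase-0.96.0-hadoop2, as the prompt above shows. If you're unsure which directory to cd into, list the archive's top-level entries first (tar -tzf does this from the shell). A small Python sketch; top_level_dirs is a hypothetical helper name:

```python
import tarfile


def top_level_dirs(archive_path):
    """Return the set of top-level names inside a .tar.gz archive.

    tar -zxvf recreates these entries in the current directory, so this is
    the directory to cd into after extracting.
    """
    names = set()
    with tarfile.open(archive_path, "r:gz") as tar:
        for member in tar.getmembers():
            name = member.name
            if name.startswith("./"):
                name = name[2:]  # some archives prefix every entry with ./
            if name:
                names.add(name.split("/", 1)[0])
    return names
```

Calling top_level_dirs("hbase-0.96.0-hadoop2-bin.tar.gz") should report hbase-0.96.0-hadoop2, matching the prompt above.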

2. Adjust some of HBase's default environment settings:

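[wyp@master hbase-0.96.0-hadoop2]$ vim conf/hbase-env.sh

# The java implementation to use. Java 1.6 required.

export JAVA_HOME=/home/wyp/Downloads/jdk1.7.0_45

(Set JAVA_HOME to the Java home directory on your own machine. If you don't know it, run which java to locate the java executable; JAVA_HOME is the directory that contains its bin/ folder, after following any symlinks.)

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

export HBASE_MANAGES_ZK=false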
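Because which java often returns a symlink such as /usr/bin/java rather than a path inside the JDK, turning its output into a JAVA_HOME takes an extra step. Below is a small Python sketch of that step; java_home_from_binary is a hypothetical helper, and the jre/ handling assumes the JDK 6/7 layout in which a JRE sits inside the JDK directory.

```python
import os


def java_home_from_binary(java_path):
    """Guess JAVA_HOME from the path printed by `which java`.

    Symlinks (e.g. /usr/bin/java -> /etc/alternatives/java -> .../bin/java)
    are resolved first, then the trailing bin/java is stripped. A JRE nested
    inside a JDK (.../jre/bin/java) is mapped to the enclosing JDK directory.
    """
    real = os.path.realpath(java_path)
    bin_dir = os.path.dirname(real)   # .../bin
    home = os.path.dirname(bin_dir)   # directory that contains bin/
    if os.path.basename(home) == "jre":
        home = os.path.dirname(home)  # prefer the JDK over its bundled JRE
    return home
```

For example, java_home_from_binary(shutil.which("java")) gives a value you can paste into hbase-env.sh.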

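HBASE_MANAGES_ZK defaults to true, which tells HBase to run and manage its own ZooKeeper. If a distributed ZooKeeper ensemble is already installed on your machines, set it to false; otherwise leave it unchanged.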
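With HBASE_MANAGES_ZK=false, HBase will not start ZooKeeper for you, so every host in hbase.zookeeper.quorum must already be serving. ZooKeeper 3.4.x answers the four-letter command ruok with imok when the server is running; the Python sketch below uses that to check each host. The function names are hypothetical, and newer ZooKeeper releases (3.5+) only answer four-letter commands listed in 4lw.commands.whitelist.

```python
import socket


def zk_is_ok(host, port=2181, timeout=3.0):
    """Send ZooKeeper's 'ruok' command; a healthy server replies 'imok'."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            reply = b""
            # The server writes its answer and then closes the connection.
            while True:
                chunk = sock.recv(16)
                if not chunk:
                    break
                reply += chunk
        return reply == b"imok"
    except OSError:  # refused, unreachable, timed out, unknown host
        return False


def check_quorum(quorum, port=2181):
    """Check every host in an hbase.zookeeper.quorum-style list like 'master,node1,node2'."""
    hosts = [h.strip() for h in quorum.split(",") if h.strip()]
    return {h: zk_is_ok(h, port) for h in hosts}
```

Running check_quorum("master,node1,node2") from master should map every host to True before you start HBase.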

3. Edit hbase-site.xml and add the following properties inside its configuration element:

[wyp@master hbase-0.96.0-hadoop2]$ vim conf/hbase-site.xml

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:8020/hbase</value>

</property>

<property>

<name>hbase.master</name>

<value>master:60000</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,node1,node2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/wyp/zookeeper</value>

</property>

<property>

<name>hbase.client.scanner.caching</name>

<value>200</value>

</property>

<property>

<name>hbase.balancer.period</name>

<value>300000</value>

</property>

<property>

<name>hbase.client.write.buffer</name>

<value>10485760</value>

</property>

<property>

<name>hbase.hregion.majorcompaction</name>

<value>7200000</value>

</property>

<property>

<name>hbase.hregion.max.filesize</name>

<value>67108864</value>

</property>

<property>

<name>hbase.hregion.memstore.flush.size</name>

<value>1048576</value>

</property>

<property>

<name>hbase.server.thread.wakefrequency</name>

<value>30000</value>

</property>
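Several of the values above are raw byte counts or milliseconds, which are easy to misread. This Python sketch (hypothetical helper names) parses an hbase-site.xml and shows those values in MiB, seconds or minutes. It expects the usual configuration root element around the properties.

```python
import xml.etree.ElementTree as ET

# Properties from the configuration above whose values are bytes or milliseconds.
BYTE_PROPS = {"hbase.client.write.buffer", "hbase.hregion.max.filesize",
              "hbase.hregion.memstore.flush.size"}
MS_PROPS = {"hbase.balancer.period", "hbase.hregion.majorcompaction",
            "hbase.server.thread.wakefrequency"}


def load_properties(xml_text):
    """Parse <property><name/><value/></property> entries from an hbase-site.xml."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name").strip(): (p.findtext("value") or "").strip()
            for p in root.iter("property")}


def describe(name, value):
    """Render byte sizes in MiB and durations in s/min; other values unchanged."""
    if name in BYTE_PROPS:
        return f"{int(value) / 2**20:g} MiB"
    if name in MS_PROPS:
        ms = int(value)
        return f"{ms / 60000:g} min" if ms >= 60000 else f"{ms / 1000:g} s"
    return value
```

Looping over load_properties(open("conf/hbase-site.xml").read()).items() and printing describe(name, value) shows, for instance, the 1048576 flush size as 1 MiB and the 7200000 major compaction interval as 120 min.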

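Pay attention to the hbase.rootdir, hbase.zookeeper.quorum and hbase.zookeeper.property.dataDir settings above. hbase.rootdir must point at the NameNode given by fs.defaultFS in Hadoop's $HADOOP_HOME/etc/hadoop/core-site.xml: the same scheme, host and port, followed by the HBase directory (here /hbase). See this blog's article "Hadoop 2.2.0 Fully Distributed Cluster Installation and Setup". hbase.zookeeper.quorum is the list of hostnames of the machines in your ZooKeeper ensemble; see this blog's "Zookeeper 3.4.5 Distributed Installation Guide". hbase.zookeeper.property.dataDir corresponds to the dataDir property in ZooKeeper's $ZOOKEEPER/conf/zoo.cfg.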
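These correspondences can be checked mechanically. A Python sketch follows; the helper names are hypothetical, and it assumes zoo.cfg lists the ensemble with the standard server.N=host:port:port lines.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def site_props(xml_text):
    """Read name/value pairs from a Hadoop/HBase *-site.xml document."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name").strip(): (p.findtext("value") or "").strip()
            for p in root.iter("property")}


def zoo_cfg(text):
    """Parse key=value lines of zoo.cfg, skipping blank lines and # comments."""
    out = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            out[key.strip()] = value.strip()
    return out


def check_consistency(hbase_site, core_site, zoo):
    """Return human-readable mismatches; an empty list means everything lines up."""
    hb, core, zk = site_props(hbase_site), site_props(core_site), zoo_cfg(zoo)
    problems = []
    # hbase.rootdir must live on the NameNode named by fs.defaultFS.
    root = urlparse(hb.get("hbase.rootdir", ""))
    fs = urlparse(core.get("fs.defaultFS", ""))
    if (root.scheme, root.netloc) != (fs.scheme, fs.netloc):
        problems.append("hbase.rootdir is not on fs.defaultFS")
    # dataDir and clientPort should mirror the corresponding zoo.cfg settings.
    for hb_key, zk_key in [("hbase.zookeeper.property.dataDir", "dataDir"),
                           ("hbase.zookeeper.property.clientPort", "clientPort")]:
        if hb.get(hb_key) != zk.get(zk_key):
            problems.append(f"{hb_key} differs from zoo.cfg {zk_key}")
    # The quorum should name exactly the hosts from zoo.cfg's server.N lines.
    zk_hosts = {v.split(":")[0] for k, v in zk.items() if k.startswith("server.")}
    quorum = {h.strip() for h in hb.get("hbase.zookeeper.quorum", "").split(",") if h.strip()}
    if quorum != zk_hosts:
        problems.append(f"quorum {sorted(quorum)} != zoo.cfg servers {sorted(zk_hosts)}")
    return problems
```

Feed it the text of conf/hbase-site.xml, core-site.xml and zoo.cfg; every returned string names a setting to fix before starting HBase.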

4. Edit the regionservers file, which lists the machines that run a RegionServer:

[wyp@master conf]$ vim regionservers

Add the following, one hostname per line. Which machines should act as RegionServers is up to you and your cluster:

master

node1

node2
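Every hostname in regionservers (and in hbase.zookeeper.quorum) has to resolve on every node, typically through /etc/hosts. A quick Python check you could run on each node; the helper names are hypothetical:

```python
import socket


def parse_regionservers(text):
    """Hostnames listed in conf/regionservers: one per line, blank lines ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def unresolvable(hosts):
    """Return the hosts this machine cannot resolve (missing from /etc/hosts or DNS)."""
    bad = []
    for host in hosts:
        try:
            socket.gethostbyname(host)
        except OSError:  # socket.gaierror is a subclass of OSError
            bad.append(host)
    return bad
```

For example, unresolvable(parse_regionservers(open("conf/regionservers").read())) should return an empty list on every machine in the cluster.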

5. As with Hadoop, package the configured HBase directory and distribute it to node1 and node2:

[wyp@master Downloads]$ tar -zcf hbase.tar.gz hbase-0.96.0-hadoop2

[wyp@master Downloads]$ scp hbase.tar.gz node1:/home/wyp/Downloads/

[wyp@master Downloads]$ scp hbase.tar.gz node2:/home/wyp/Downloads/

6. Log in to node1 and node2 in turn and extract the hbase.tar.gz you just copied over:

[wyp@master Downloads]$ ssh node1

Last login: Sun Jan 19 15:17:46 2014 from master

[wyp@node1 ~]$ tar -zxf Downloads/hbase.tar.gz

[wyp@node1 ~]$ exit

logout

Connection to node1 closed.

[wyp@master Downloads]$
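Steps 5 and 6 repeat the same two commands for each node, so they are easy to script. The Python sketch below only prints the commands unless dry_run=False. The archive name and /home/wyp/Downloads/ destination come from the commands above; the helper names are hypothetical, and it assumes password-less ssh from master to each node is already set up.

```python
import subprocess

ARCHIVE = "hbase.tar.gz"             # built on master with tar -zcf (step 5)
REMOTE_DIR = "/home/wyp/Downloads/"  # scp destination used in step 5


def distribution_plan(nodes, archive=ARCHIVE, remote_dir=REMOTE_DIR):
    """Return the argv lists that copy the archive to each node and unpack it there."""
    plan = []
    for node in nodes:
        plan.append(["scp", archive, f"{node}:{remote_dir}"])
        # Like step 6, the remote tar runs in the login (home) directory,
        # so the tree is unpacked to ~/hbase-0.96.0-hadoop2 on each node.
        plan.append(["ssh", node, "tar", "-zxf", remote_dir + archive])
    return plan


def run(plan, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in plan:
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing copy/extract
```

From the Downloads directory on master, run(distribution_plan(["node1", "node2"])) shows what would happen; pass dry_run=False to actually execute it.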

7. Start the Master and RegionServer processes:

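Start the Master process on master:

[wyp@master Downloads]$ $HBASE_HOME/bin/hbase-daemon.sh start master

Start the RegionServer process on node1 and node2 (and on master as well, since it is also listed in regionservers):

[wyp@node1 Downloads]$ $HBASE_HOME/bin/hbase-daemon.sh start regionserver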
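HBase also ships bin/start-hbase.sh, which starts the Master and then, over ssh, a RegionServer on every host listed in conf/regionservers; it assumes HBase is installed at the same path on every node. If you prefer starting daemons one by one as above, this Python sketch builds the equivalent per-host commands. The helper name is hypothetical, and it takes an explicit install path because $HBASE_HOME may not be set in a non-interactive ssh session.

```python
def start_commands(master, regionservers_text, hbase_home):
    """Argv lists that start the Master on `master` and a RegionServer on every listed host."""
    daemon = f"{hbase_home.rstrip('/')}/bin/hbase-daemon.sh"
    hosts = [line.strip() for line in regionservers_text.splitlines() if line.strip()]
    cmds = [["ssh", master, daemon, "start", "master"]]
    cmds += [["ssh", host, daemon, "start", "regionserver"] for host in hosts]
    return cmds
```

Each list can be passed to subprocess.run; adjust hbase_home per host if, as in step 6, the nodes unpack HBase somewhere other than master does.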

8. Check whether HBase was deployed successfully:

Open http://master:60010 in a browser. If the page loads, the installation succeeded!
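If you'd rather check from a script than a browser, here is a Python sketch that requests the Master web UI and reports whether it answered with HTTP 200. The function name is hypothetical; proxies are bypassed on purpose, since cluster hostnames like master are usually not reachable through one.

```python
import urllib.request


def master_ui_up(url="http://master:60010/", timeout=5.0):
    """True if the HBase Master web UI answers with HTTP 200."""
    # An empty ProxyHandler ignores any http_proxy environment variable.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and socket timeouts are all OSError subclasses
        return False
```

Once the Master has started, master_ui_up() run from any machine that can resolve master should return True.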

When starting the master process, you may run into the following exception:


org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.RpcServerException):

Unknown out of band call #-2147483647

at org.apache.hadoop.ipc.Client.call(Client.java:1347)

at org.apache.hadoop.ipc.Client.call(Client.java:1300)

...................................

at com.sun.proxy.$Proxy13.getFileInfo(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.lang.reflect.Method.invoke(Method.java:606)

...................................

at com.sun.proxy.$Proxy13.getFileInfo(Unknown Source)

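This happens because the Hadoop jars bundled with HBase 0.96 do not match the versions in Hadoop 2.2.0. Replace the corresponding jars in HBase's lib directory with the ones from the share/hadoop directory of Hadoop-2.2.0, then restart HBase.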
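To find which jars need replacing, compare the versions in HBase's lib directory with those under Hadoop's share/hadoop. The Python sketch below uses hypothetical helper names, assumes the usual hadoop-<module>-<version>.jar naming and skips -tests/-sources jars. It lists each HBase jar alongside the Hadoop jar that should replace it.

```python
import os
import re

# hadoop-common-2.2.0.jar -> ("hadoop-common", "2.2.0")
JAR_RE = re.compile(r"^(hadoop-[a-z-]+?)-(\d[\w.-]*)\.jar$")


def hadoop_jars(root):
    """Map artifact name -> (version, path) for hadoop-*.jar files anywhere under root."""
    found = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if name.endswith(("-tests.jar", "-sources.jar")):
                continue
            m = JAR_RE.match(name)
            if m:
                found[m.group(1)] = (m.group(2), os.path.join(dirpath, name))
    return found


def mismatches(hbase_lib, hadoop_share):
    """(hbase_jar, hadoop_jar) pairs whose versions differ; replace the first with the second."""
    hb, hd = hadoop_jars(hbase_lib), hadoop_jars(hadoop_share)
    return [(hb[a][1], hd[a][1]) for a in sorted(hb) if a in hd and hb[a][0] != hd[a][0]]
```

For each pair returned by mismatches("$HBASE_HOME/lib", "$HADOOP_HOME/share/hadoop") (with the variables expanded), delete the HBase jar and copy the Hadoop one into lib/, for example with os.remove and shutil.copy2, then restart HBase.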

This is an original article; copyright belongs to 过往记忆大数据 (过往记忆). Do not republish without permission.

Permalink: HBase 0.96.0 Distributed Installation Guide (https://www.iteblog.com/archives/902.html)

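There are two things I don't quite understand:

1. Does HBase need only one master? What happens if that master goes down?

2. You said above that HBase won't manage ZooKeeper, so why do hbase.zookeeper.property.dataDir and hbase.zookeeper.quorum still need to be set in hbase-site.xml? Those are all configured in ZooKeeper itself.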

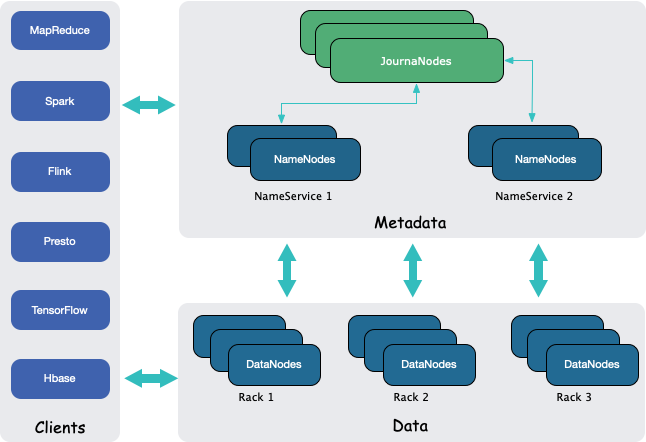

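I've already set up HDFS high availability with QJM + ZooKeeper. Now that I'm installing HBase, which also needs ZooKeeper, I'd like to reuse that existing ZooKeeper.

Those are the two things I don't understand. I hope you can clear them up for me. Thanks!!

1. At least up to HBase 0.96 there is still only one master, though later versions seem to have measures for dealing with the master being a single point of failure. Take a look at the latest HBase release.

2. Configuring those properties in hbase-site.xml tells HBase where to find the ZooKeeper you set up yourself.

Thanks a lot!

I'm new to HBase and keep getting the error below????

Take a look at this error: it's a version problem. Which versions of HBase and Hadoop are you using?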