
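Apache Spark 2.1.0 is the second release on the 2.x line. This release makes significant strides toward production readiness for Structured Streaming, which now supports event time watermarks and Kafka 0.10. In addition, the release focuses on usability, stability, and polish, and resolves more than 1,200 tickets. The updates in this release are listed below: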

Core and Spark SQL

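When the Spark project announces a new release, it generally groups Spark Core and Spark SQL together, which shows how tightly the two are converging. The DataFrame/Dataset API in Spark SQL will gradually replace the RDD API as the most commonly used API in Spark. MLlib and Spark Streaming in the Spark ecosystem are likewise evolving toward the DataFrame/Dataset API.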

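API updates

1、SPARK-17864: The data type API is now stable, which means DataFrame and Dataset can be used in production and will evolve in later releases while preserving backward compatibility;

2、SPARK-18351: Adds from_json and to_json, which convert between JSON data and Struct objects (the core data structure of DataFrame);

3、SPARK-16700: Adds support for dict in PySpark: when creating a DataFrame, Python dictionaries can be used as values of a StructType.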
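To make the JSON/struct round trip in item 2 concrete, here is a minimal plain-Python sketch of the idea. It is not Spark code: the helper names `from_json_like` and `to_json_like` and the list-of-field-names schema are hypothetical stand-ins, and returning `None` for unparseable input is an assumption made for this illustration.

```python
import json

def from_json_like(text, schema):
    """Parse a JSON string into a dict holding exactly the fields named in `schema`.

    Hypothetical helper: returns None when the input is not a JSON object.
    """
    try:
        parsed = json.loads(text)
    except (TypeError, ValueError):
        return None
    if not isinstance(parsed, dict):
        return None
    # Fields missing from the input become None; fields outside the schema are dropped.
    return {field: parsed.get(field) for field in schema}

def to_json_like(struct):
    """Serialize a struct-like dict back to a JSON string with stable key order."""
    return json.dumps(struct, sort_keys=True)

schema = ["id", "name"]  # assumed schema for the example
row = from_json_like('{"id": 1, "name": "spark", "extra": true}', schema)
print(row)
print(to_json_like(row))
print(from_json_like("not json", schema))
```

The point of the schema argument is that the parser knows the target structure up front, so every parsed value has the same shape regardless of what extra keys a given JSON string carries.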

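Performance and stability

1、SPARK-17861: Improves how Spark SQL handles partitions. Partition metadata is cached in the metastore to address issues such as cold-start latency and query pushdown;

2、SPARK-16523: Optimizes the performance of group-by aggregation. Introducing a row-based hashmap addresses the poor aggregation performance on wide data (data with a very large number of columns).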
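As a rough illustration of what "row-based hashmap" means for a group-by, the sketch below keeps one row-shaped buffer per grouping key, with a slot for every aggregated column, and updates that buffer in place. It is a conceptual plain-Python model under assumed names (`hash_aggregate`, `key_index`, `value_indices`); it says nothing about how Spark's generated code is actually laid out.

```python
def hash_aggregate(rows, key_index, value_indices):
    """Sum the columns in `value_indices`, grouped by the column at `key_index`."""
    buffers = {}  # grouping key -> one row-shaped buffer with a slot per aggregate
    for row in rows:
        key = row[key_index]
        buffer = buffers.get(key)
        if buffer is None:
            # First time this key is seen: allocate a single buffer row for all aggregates.
            buffer = [0] * len(value_indices)
            buffers[key] = buffer
        # One lookup per input row, then every aggregate is updated in the same buffer.
        for slot, column in enumerate(value_indices):
            buffer[slot] += row[column]
    return buffers

rows = [("a", 1, 10), ("b", 2, 20), ("a", 3, 30)]
print(hash_aggregate(rows, key_index=0, value_indices=[1, 2]))
```

With many aggregated columns, the single lookup per input row is shared by all of them, which is the intuition behind keeping the whole aggregation state for a key together in one row.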

Other notable changes

1、SPARK-9876: parquet-mr upgraded to 1.8.1

Programming guides: Spark Programming Guide and Spark SQL, DataFrames and Datasets Guide.

Structured Streaming

API updates

1、SPARK-17346: Kafka 0.10 support in Structured Streaming

2、SPARK-17731: Metrics for Structured Streaming

3、SPARK-17829: Stable format for offset log

4、SPARK-18124: Observed delay based Event Time Watermarks

5、SPARK-18192: Support all file formats in structured streaming

6、SPARK-18516: Separate instantaneous state from progress performance statistics
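The idea behind observed-delay-based event time watermarks (item 4) can be sketched in a few lines of plain Python: the watermark trails the largest event time seen so far by a fixed allowed delay, and events older than the watermark are treated as too late. The class and attribute names below are hypothetical, times are plain integers, and the sketch updates the watermark per event for simplicity, which is an assumption rather than a description of when Spark advances it.

```python
class WatermarkTracker:
    """Tracks a watermark equal to (max observed event time - allowed delay)."""

    def __init__(self, allowed_delay):
        self.allowed_delay = allowed_delay
        self.max_event_time = None

    @property
    def watermark(self):
        # No watermark exists until at least one event has been observed.
        if self.max_event_time is None:
            return None
        return self.max_event_time - self.allowed_delay

    def accept(self, event_time):
        """Return True if the event is on time, False if it is older than the watermark."""
        current = self.watermark
        if current is not None and event_time < current:
            return False
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        return True

tracker = WatermarkTracker(allowed_delay=10)
for event_time in [100, 95, 120, 105]:
    print(event_time, tracker.accept(event_time), tracker.watermark)
```

In this run the event at time 105 is rejected because the event at 120 has already pushed the watermark to 110. State for windows that end before the watermark can then be discarded, which is what keeps a long-running aggregation bounded.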

Stability

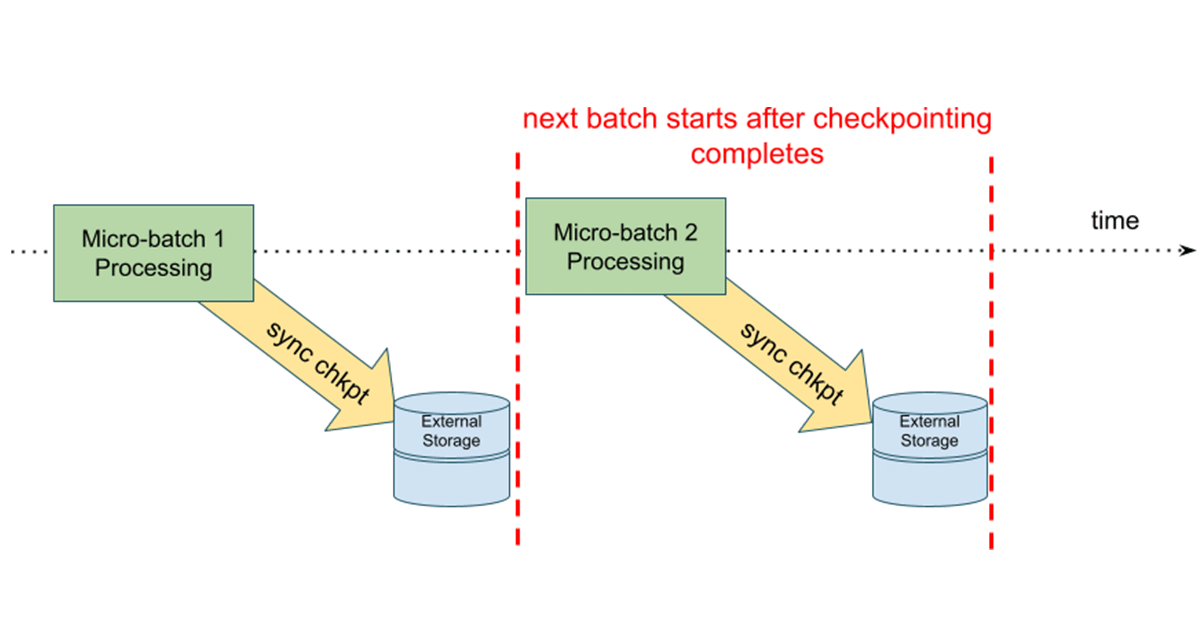

1、SPARK-17267: Long running structured streaming requirements

Programming guide: Structured Streaming Programming Guide.

MLlib

API updates

1、SPARK-5992: Locality Sensitive Hashing

2、SPARK-7159: Multiclass Logistic Regression in DataFrame-based API

3、SPARK-16000: ML persistence: Make model loading backwards-compatible with Spark 1.x with saved models using spark.mllib.linalg.Vector columns in DataFrame-based API
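For readers unfamiliar with Locality Sensitive Hashing (item 1), the sketch below shows MinHash, one common LSH family for set similarity, in plain Python. It is a textbook-style illustration only: the function names, the hash family `(a * x + b) % PRIME`, and the sample sets are assumptions chosen for this example and are not taken from MLlib.

```python
import random

PRIME = 2_147_483_647  # 2**31 - 1, used as the modulus of the hash family

def make_hash_functions(count, seed=0):
    """Create `count` random (a, b) pairs defining h(x) = (a * x + b) % PRIME."""
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(0, PRIME)) for _ in range(count)]

def minhash_signature(items, hash_functions):
    """Signature entry i is the minimum of hash function i over the (non-empty) set."""
    return [min((a * x + b) % PRIME for x in items) for a, b in hash_functions]

def estimated_similarity(sig_a, sig_b):
    """Fraction of matching signature entries, an estimate of Jaccard similarity."""
    matches = sum(1 for x, y in zip(sig_a, sig_b) if x == y)
    return matches / len(sig_a)

hashes = make_hash_functions(128)
docs = {
    "a": {1, 2, 3, 4, 5, 6, 7, 8},
    "b": {1, 2, 3, 4, 5, 6, 7, 9},   # shares 7 of 9 distinct elements with "a"
    "c": {100, 200, 300},            # disjoint from "a"
}
signatures = {name: minhash_signature(items, hashes) for name, items in docs.items()}
print(estimated_similarity(signatures["a"], signatures["b"]))
print(estimated_similarity(signatures["a"], signatures["c"]))
```

Because similar sets tend to agree on many signature entries, grouping items by (parts of) their signatures brings likely neighbors into the same bucket without comparing every pair.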

Performance and stability

1、SPARK-17748: Faster, more stable LinearRegression for < 4096 features

2、SPARK-16719: RandomForest: communicate fewer trees on each iteration

Programming guide: Machine Learning Library (MLlib) Guide.

SparkR

The main focus of SparkR in the 2.1.0 release was adding extensive support for ML algorithms, which include:

New ML algorithms in SparkR including LDA, Gaussian Mixture Models, ALS, Random Forest, Gradient Boosted Trees, and more

Support for multinomial logistic regression providing similar functionality as the glmnet R package

Enable installing third party packages on workers using spark.addFile (SPARK-17577).

Standalone installable package built with the Apache Spark release. We will be submitting this to CRAN soon.

Programming guide: SparkR (R on Spark).

GraphX

SPARK-11496: Personalized pagerank
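Personalized PageRank differs from ordinary PageRank in that the random walk always restarts at one chosen source vertex, so the scores measure importance relative to that vertex. The following plain-Python power iteration is a textbook formulation given as a sketch; the function name, the `reset_prob` default, and the handling of vertices without outgoing edges are assumptions and do not describe the GraphX implementation.

```python
def personalized_pagerank(edges, source, reset_prob=0.15, iterations=50):
    """Power iteration where every restart jumps back to `source`."""
    nodes = sorted({n for edge in edges for n in edge} | {source})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    # All probability mass starts at the source vertex.
    rank = {n: (1.0 if n == source else 0.0) for n in nodes}
    for _ in range(iterations):
        new_rank = {n: 0.0 for n in nodes}
        for n in nodes:
            targets = out_links[n]
            if targets:
                share = (1.0 - reset_prob) * rank[n] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumed policy: a vertex with no outgoing edges sends its mass to the source.
                new_rank[source] += (1.0 - reset_prob) * rank[n]
        # The restart mass always lands on the source (ranks sum to 1 throughout).
        new_rank[source] += reset_prob
        rank = new_rank
    return rank

edges = [(1, 2), (2, 3), (3, 1), (4, 1)]
for node, score in personalized_pagerank(edges, source=1).items():
    print(node, round(score, 4))
```

Vertex 4 points into the cycle but cannot be reached from the source, so its score stays at zero, while vertices closer to the source along the cycle score higher.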

Programming guide: GraphX Programming Guide.

Deprecations

MLlib

SPARK-18592: Deprecate unnecessary Param setter methods in tree and ensemble models

Changes of behavior

Core and SQL

SPARK-18360: The default table path of tables in the default database will be under the location of the default database instead of always depending on the warehouse location setting.

SPARK-18377: spark.sql.warehouse.dir is a static configuration now. Users need to set it before the start of the first SparkSession and its value is shared by sessions in the same application.

SPARK-14393: Values generated by non-deterministic functions will not change after coalesce or union.

SPARK-18076: Fix default Locale used in DateFormat, NumberFormat to Locale.US

SPARK-16216: CSV and JSON data sources write timestamp and date values in ISO 8601 formatted string. Two options, timestampFormat and dateFormat, are added to these two data sources to let users control the format of timestamp and date value in string representation, respectively. Please refer to the API doc of DataFrameReader and DataFrameWriter for more details about these two configurations.
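To show what "ISO 8601 by default, with a per-type format override" looks like in practice, here is a small plain-Python analogy. The function `format_row` and its parameters are hypothetical, the sample date is arbitrary, and the patterns use Python's `strftime` syntax, which differs from the pattern syntax Spark's timestampFormat and dateFormat options accept.

```python
from datetime import date, datetime, timezone

def format_row(ts, d, timestamp_format=None, date_format=None):
    """Render a timestamp and a date as strings.

    Without an explicit format, both values fall back to ISO 8601.
    """
    ts_text = ts.strftime(timestamp_format) if timestamp_format else ts.isoformat()
    d_text = d.strftime(date_format) if date_format else d.isoformat()
    return ts_text, d_text

ts = datetime(2016, 12, 28, 9, 30, 0, tzinfo=timezone.utc)  # arbitrary sample value
d = date(2016, 12, 28)

print(format_row(ts, d))                                 # ISO 8601 defaults
print(format_row(ts, d, "%Y/%m/%d %H:%M", "%d.%m.%Y"))   # caller-chosen formats
```

Keeping the two options separate lets a writer change how dates look without touching timestamps, and the reader side needs the matching formats to parse the values back.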

SPARK-17427: Function SIZE returns -1 when its input parameter is null.

SPARK-16498: LazyBinaryColumnarSerDe is fixed as the SerDe for RCFile.

SPARK-16552: If a user does not specify the schema to a table and relies on schema inference, the inferred schema will be stored in the metastore. The schema will not be inferred again when this table is used.

Structured Streaming

SPARK-18516: Separate instantaneous state from progress performance statistics

MLlib

SPARK-17870: ChiSquareSelector now accounts for degrees of freedom by using pValue rather than raw statistic to select the top features.
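Why degrees of freedom matter here can be seen with a small worked example: a larger chi-squared statistic is not necessarily stronger evidence if it comes with more degrees of freedom. The sketch below uses the closed-form survival function of the chi-squared distribution, which in this simple form only holds for even degrees of freedom. The feature names and the (statistic, degrees of freedom) pairs are made up for illustration, and the code is not MLlib's implementation.

```python
import math

def chi2_survival_even_df(statistic, df):
    """P(X >= statistic) for a chi-squared variable with positive, even `df`.

    Uses exp(-x/2) * sum_{i < df/2} (x/2)**i / i!, valid only for even df.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only covers positive even df")
    half = statistic / 2.0
    term, total = 1.0, 0.0
    for i in range(df // 2):
        total += term
        term *= half / (i + 1)
    return math.exp(-half) * total

# Hypothetical (statistic, degrees of freedom) pairs for two features.
features = {"feature_a": (10.0, 2), "feature_b": (12.0, 8)}

by_statistic = sorted(features, key=lambda name: features[name][0], reverse=True)
by_p_value = sorted(features, key=lambda name: chi2_survival_even_df(*features[name]))

print(by_statistic)  # ranking by raw statistic puts feature_b first
print(by_p_value)    # ranking by p-value puts feature_a first
```

Here feature_b has the larger statistic, but with 8 degrees of freedom its p-value is roughly 0.15, while feature_a with 2 degrees of freedom has a p-value below 0.01, so the two ranking rules select different top features.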

Known Issues

SPARK-17647: In SQL LIKE clause, wildcard characters ‘%’ and ‘_’ right after backslashes are always escaped.

SPARK-18908: If a StreamExecution fails to start, users need to check stderr for the error.

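Copyright of this original article belongs to 过往记忆大数据 (过往记忆). Reproduction without permission is prohibited.

Link to this article: Apache Spark 2.1.0 Officially Released (https://www.iteblog.com/archives/1952.html)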